What are the best tools for web scraping?


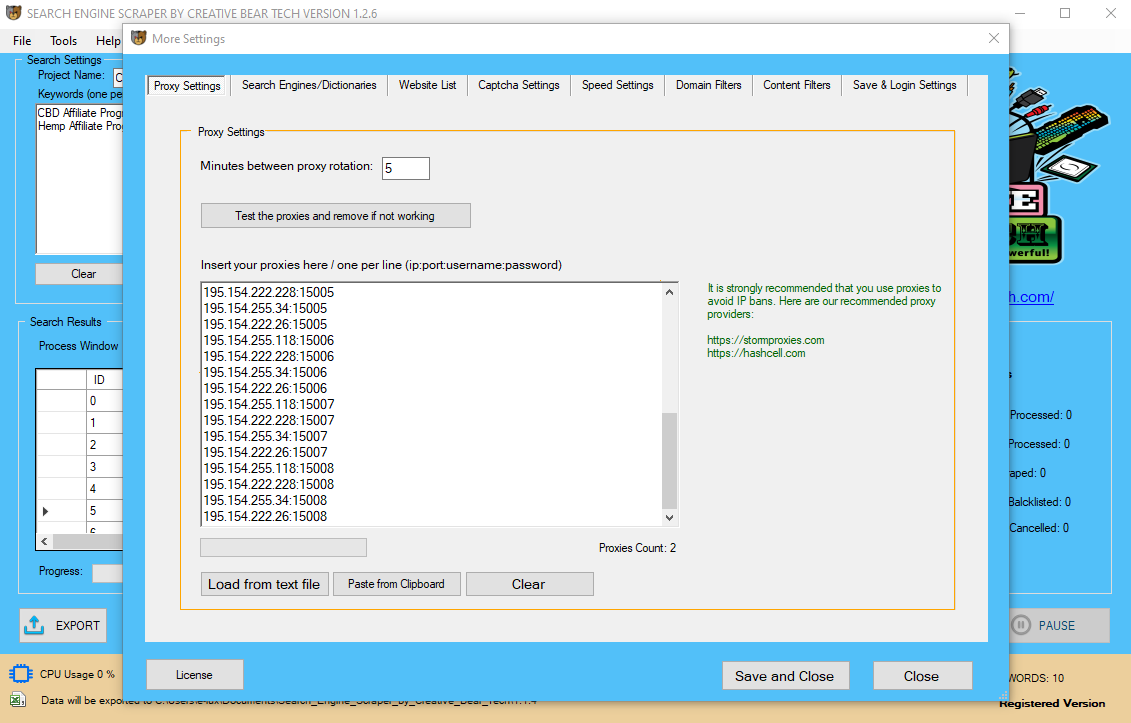

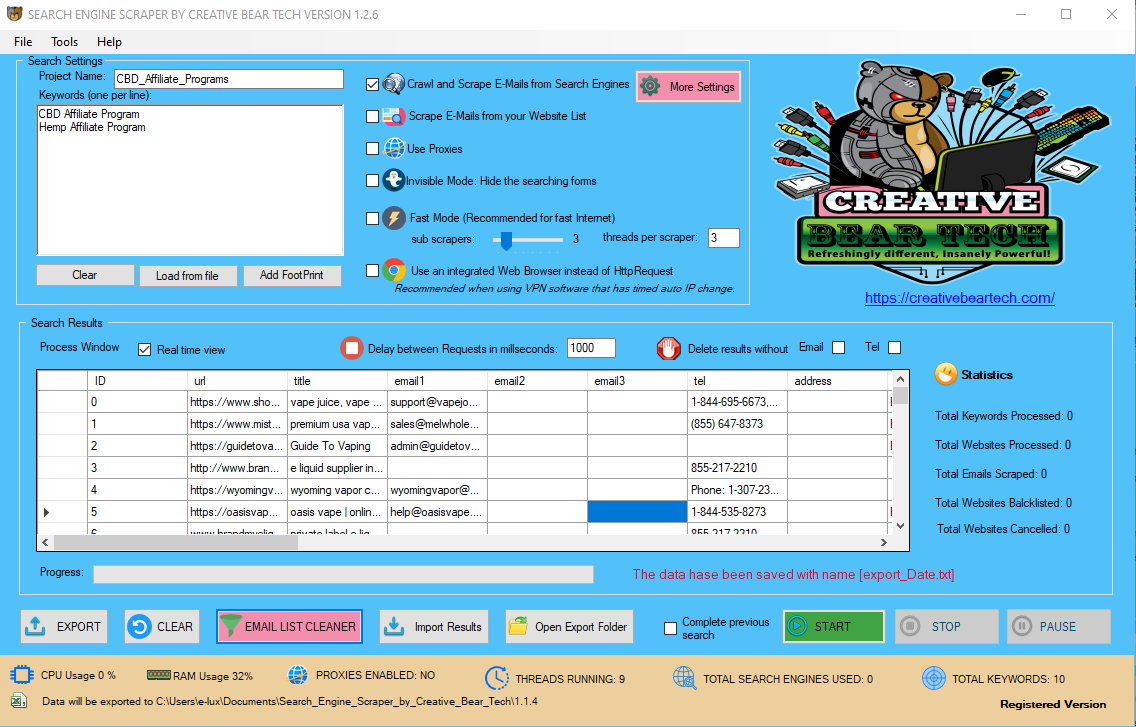

Our services make requests through hundreds of residential and non-residential rotating proxies around the world to provide the most effective scraping experience and meet every business need. You can make a high volume of requests to target websites and scrape data through a dedicated proxy pool without worrying about being banned. When websites combine user agents, IP addresses and other data about a user, it is called device fingerprinting. If you change IPs but your data scraper always leaves the same fingerprint, your scraper will be detected and you might end up in a honeypot. In general, proxies that are undetectable and unblockable are the most effective.


Faster Data Intelligence With Unlimited Residential Proxies

That means you can launch a script to send 1,000 requests to any number of websites and get 1,000 different IP addresses. Rotating proxies and IP addresses together with rotating user agents can help your scrapers get past most anti-scraping measures and avoid being detected as a scraper. Smartproxy owns a residential proxy pool with over 10 million residential IPs. Their proxies work quite well for web scraping thanks to their session management system. They have proxies that can keep a session and the same IP for 10 minutes, which is good for scraping login-based websites. Webshare does not have high-rotating proxies. Its IP rotation system is time-based, rotating either every 5 minutes or every hour. Stormproxies is among the most versatile proxy providers in terms of the use cases its proxies suit. Its datacenter proxy pool contains over 70,000 IPs and is priced by threads, that is, by the number of concurrent requests allowed. Proxyrack is another residential proxy provider whose proxies you can use for web scraping. While it has over 2 million residential IPs in its pool, only a little over 500,000 are available to use at any moment.
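The following minimal sketch rotates proxies together with user agents. It pairs each URL with the next proxy and the next user-agent string, cycling through both lists. All of the following are hypothetical placeholders:

- The proxy addresses (taken from the 203.0.113.0/24 documentation range).
- The user-agent strings.
- The `plan_requests` helper.

The sketch only builds the request plan and does not send anything.

```python
import itertools
from dataclasses import dataclass

# Hypothetical placeholders: substitute proxies and user-agent strings you actually use.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0)",
]


@dataclass(frozen=True)
class RequestPlan:
    url: str
    proxy: str
    user_agent: str


def plan_requests(urls, proxies, user_agents):
    """Pair each URL with the next proxy and the next user agent, cycling both lists."""
    proxy_cycle = itertools.cycle(proxies)
    agent_cycle = itertools.cycle(user_agents)
    return [RequestPlan(url, next(proxy_cycle), next(agent_cycle)) for url in urls]


if __name__ == "__main__":
    urls = [f"https://example.com/page/{i}" for i in range(4)]
    for plan in plan_requests(urls, PROXIES, USER_AGENTS):
        print(plan)
```

Because the two lists here have lengths 3 and 2, the pairing of proxy and user agent shifts as the cycles run. If you would rather present one stable fingerprint per IP, an alternative is to pin a single user agent to each proxy.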

Use Elite Proxies Whenever Possible If You Are Using Free Proxies (Or Even If You Are Paying For Proxies)

Once you have the list of proxy IPs to rotate, the rest is easy. Let's get to sending requests through a pool of IP addresses. In this blog post, we will show you how to send your requests to a website using a proxy, and then how to send those requests through multiple IP addresses or proxies. To solve these issues, we use proxies to make successful requests for the public data we need. If you do not want to bother with web scrapers, proxies, servers, CAPTCHA solvers, and web scraping APIs, then PromptCloud is the service to choose. With them, you only have to submit your data requirement and wait for them to deliver it, fairly quickly, in the required file format.

Since the target website you're sending requests to sees each request coming from the proxy machine's IP address, it has no idea what your original scraping machine's IP is. GDPR defines IP addresses as personally identifiable information, so you need to make sure that any EU residential IPs you use as proxies are GDPR compliant. This means making sure that the owner of each residential IP has given explicit consent for their home or mobile IP to be used as a web scraping proxy.

The main benefit of a dedicated proxy for web scraping is that you know no one else is going to mess up your rate limit calculations by also sending requests to your target website through the same IP address. To get around this kind of rate limit, you can spread a large number of requests evenly across a large number of proxy servers. The major benefit of proxies for web scraping is that you can hide your web scraping machine's IP address.
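The approach described above can be sketched with Python's standard `urllib.request` module. `ProxyHandler` routes an opener's traffic through a proxy, and cycling over one opener per proxy spreads requests evenly across the pool. The proxy URLs and the helper names `opener_for_proxy` and `fetch_via_pool` are assumptions for illustration, not part of any provider's API.

```python
import itertools
import urllib.request


def opener_for_proxy(proxy_url):
    """Build an opener that sends both http:// and https:// requests through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_via_pool(urls, proxy_urls, timeout=10.0):
    """Send each URL through the next proxy in round-robin order.

    Returns a list of (url, proxy_url, http_status) tuples. Errors are not caught here,
    so a dead or blocked proxy raises urllib.error.URLError (or its subclass HTTPError).
    """
    pool = itertools.cycle([(p, opener_for_proxy(p)) for p in proxy_urls])
    results = []
    for url in urls:
        proxy_url, opener = next(pool)
        with opener.open(url, timeout=timeout) as response:
            results.append((url, proxy_url, response.status))
    return results
```

Calling `fetch_via_pool(["https://example.com/"], ["http://203.0.113.10:8080", "http://203.0.113.11:8080"])` would send the request through the first proxy. These addresses are placeholders from a documentation range and will not answer, so substitute proxies you actually have access to.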

Free Proxy List

They have proxies that are high-rotating and change IP address after every web request. The best thing to do is use proxy providers that take care of IP rotation for you. It is also important to stress here that residential IP proxies are the best for web scraping. Helium Scraper is another tool you can use to scrape websites as a non-coder. You can capture complex data by defining your own actions, and coders can also run custom JavaScript. When your scraper is banned, it can really hurt your business, because the incoming data flow you have grown used to is suddenly missing. Also, websites sometimes display different information depending on country or region. If too many requests come from a single IP address, that IP address will be blocked by the website you're currently scraping. You will be completely locked out of the website and won't be able to continue scraping. Monkey Socks is a smaller-scale operation than many other proxy service providers on this list, and its offering reflects this. For a residential rotating proxy service, it's odd for a company not to list the number of IP addresses it has, unique or not. Its dashboard only rotates proxies based on time or user requests, both of which are less conducive to web scraping than a service that works with scraper tools. Additionally, you can choose region-specific IPs to obtain city- or state-specific data from your target websites. Webshare is a datacenter proxy provider that gives its users free proxies. Apart from the free proxies, it has paid proxies that are faster, elite, and work fairly well for web scraping. If you have been reading this article, you know we do not support the use of free proxies, as they usually come with some unfavorable clauses.
Below are the 3 best residential proxy providers out there right now. NetNut offers the fastest residential proxy network, with one-hop connectivity, rotating IPs, and 24/7 IP availability to meet your web scraping and data extraction expectations. You will agree with me that unless you're scraping at a really large scale, this number of proxies is sufficient. The number of proxies you need depends on two things: how many requests the website allows from a single IP address within an hour, and how many pages you want to scrape. The request limits set by websites vary from site to site. In general, you pay a premium for dedicated proxy servers.
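The relationship in the paragraph above can be written as a small function. It divides the pages you want per hour by the requests one IP may send per hour, rounds up, and optionally applies the 2-3x safety multiple recommended elsewhere in this article. The function name and the figures in the usage note are hypothetical. It also assumes one request per page. Real per-IP limits vary by site and usually have to be measured.

```python
import math


def proxies_needed(pages_per_hour, requests_per_ip_per_hour, safety_multiple=1.0):
    """Estimate proxy pool size, assuming one request per page.

    pages_per_hour: how many pages you want to fetch each hour.
    requests_per_ip_per_hour: the target site's per-IP limit (measured or estimated).
    safety_multiple: headroom factor, e.g. 2-3 to stay clear of rate limits.
    """
    if requests_per_ip_per_hour <= 0:
        raise ValueError("requests_per_ip_per_hour must be positive")
    base = math.ceil(pages_per_hour / requests_per_ip_per_hour)
    return math.ceil(base * safety_multiple)
```

Take a hypothetical 10,000 pages per hour and a limit of 500 requests per IP per hour. Then `proxies_needed(10_000, 500)` gives 20, and `proxies_needed(10_000, 500, safety_multiple=2.5)` gives 50.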


For regular websites, you can use their high-rotating proxies that change IP after every request. They have proxies in about 195 countries and in eight major cities around the globe. Residential IPs are the IPs of private residences, enabling you to route your request through a residential network. They can be financially cumbersome when you can achieve similar results with cheaper datacenter IPs. With proxy servers, scraping software can mask its IP address with residential IP proxies, enabling it to access websites that might not have been available without a proxy. Crawlera manages a massive pool of proxies, carefully rotating, throttling, blacklisting and selecting the optimal IPs to use for each individual request to give optimal results at the lowest cost. Microleaves is known for high speed, competitive pricing packages, and decent customer service. The best solution to this problem is to use a pool of proxies and thus split your requests over a larger number of proxies. Depending on the number of requests, the target websites, IP type and quality, and other factors, you can buy a high-quality proxy pool that fully supports your scraping sessions. Public proxies, by contrast, are not only of very low quality, they can also be very harmful. These proxies are open for anyone to use, so they quickly get used to slam websites with huge amounts of dubious requests, which inevitably gets them blacklisted and blocked by websites very quickly. What makes them even worse is that these proxies are often infected with malware and other viruses. Usually, we use proxies to mask our IP address or to unblock a website that doesn't work with our IP address.
For scraping tools, you will need a proxy with a large IP pool that keeps rotating between those IPs. Whenever you use a web scraping tool, it makes multiple concurrent requests to websites, using different headers to gather and harvest data from them. The problem is that websites nowadays have several restrictions on IPs and on multiple requests. Its system is quite practical and can help you handle a good number of tasks, including IP rotation using its own proxy pool of over 40 million IPs. Web scraping isn't just about having an anonymous residential rotating proxy network. As websites try to lock down data and track users, there are many more methods that identify a user besides IP addresses. Here are some tips you should keep in mind before you set up a scraper with expensive proxies. However, if you need a web scraping proxy to scrape large amounts of data from websites that commonly block datacenter proxies, then residential IPs are your best bet.
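Scraping tools make many concurrent requests, and some providers (such as Stormproxies, mentioned earlier) price by the number of concurrent threads. A minimal sketch, assuming you supply your own `fetch` callable, caps the number of in-flight requests with a thread pool from Python's standard library. `scrape_concurrently` is a hypothetical helper name.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_concurrently(urls, fetch, max_workers=4):
    """Call fetch(url) for every URL with at most max_workers calls in flight at once.

    fetch is a callable you supply (hypothetically, one that sends the request through
    a proxy). Results are returned in the same order as urls, and any exception raised
    by fetch is re-raised when its result is collected.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))
```

Setting `max_workers` to the thread count your proxy plan allows keeps the scraper from exceeding that concurrency limit.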


- Scraper API rotates IP addresses with every request, from a pool of tens of millions of proxies across over a dozen ISPs, and automatically retries failed requests, so you'll never be blocked.

- Scraper API also handles CAPTCHAs for you, so you can concentrate on turning websites into actionable data.

- Their pool mixes datacenter proxies, residential proxies, and mobile proxies.

- One of the most frustrating parts of automated web scraping is constantly dealing with IP blocks and CAPTCHAs.

They also need to be fast and secure, and to maintain data privacy. All of the premium proxy providers have proxies with these qualities, and in general, we'd vote residential proxies the best proxies for web scraping. Also important is the fact that they do not work on some advanced websites like Instagram.

Web Scraping With Proxies: The Complete Guide To Scaling Your Web Scraper

From them, you get cleaned data from web pages without any technical hassle. They provide a fully managed service with a dedicated support team. You will rarely hear of web scraping without the mention of proxies, especially when it is done at a reasonable scale rather than on just a couple of pages. For experienced web scrapers, incorporating proxies is easy, and paying for a proxy API service for web scraping may be overkill. What makes them excellent for web scraping, apart from being undetectable, is their high-rotating proxies, which change the IP address assigned to your web requests after every request. If you own your own residential IPs, then you will need to handle this consent yourself. If you're planning on scraping at any reasonable scale, simply buying a pool of proxies and routing your requests through them likely won't be sustainable long term. Your proxies will inevitably get banned and stop returning high-quality data. As a general rule, always stay well away from public proxies, or "open proxies".

Luminati is arguably the best proxy service provider out there. It also owns the largest proxy network in the world, with over 72 million residential IPs in its pool. Interestingly, it is compatible with most of the popular websites on the Internet today. Luminati has the best session management system because it lets you choose how long sessions are maintained. It also has high-rotating proxies that change IP after every request. The fact is, unless you are using a web scraping API, which is generally considered expensive, proxies are a must. When it comes to proxies for web scraping, I advise users to choose proxy providers with residential rotating IPs, because this takes the burden of proxy management off you.
With over 5 billion API requests handled each month, Scraper API is a force to be reckoned with in the web scraping API market.

There are not nearly as many datacenter proxy pools out there as there are residential IPs. Both Smartproxy and Luminati price by bandwidth. Smartproxy has high-rotating proxies that change IP after every request, which makes it excellent for web scraping. If you want a session maintained, you can keep one for 10 minutes with their sticky IPs. I once worked on a gig to scrape the death data for Game of Thrones, and I got it done for all cases of death without using a proxy.
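Providers implement sticky IPs on their side, but the same idea can be sketched on the client. Reuse one proxy for a fixed window (600 seconds here, matching the 10-minute sessions mentioned above), then move on to the next proxy. The `StickySession` class, its pool, and the window length are assumptions. The injectable `clock` exists only so the behavior can be tested.

```python
import itertools
import time


class StickySession:
    """Reuse one proxy for sticky_seconds, then switch to the next proxy in the pool."""

    def __init__(self, proxies, sticky_seconds=600.0, clock=time.monotonic):
        self._cycle = itertools.cycle(proxies)
        self._sticky_seconds = sticky_seconds
        self._clock = clock  # injectable so tests can control time
        self._current = next(self._cycle)
        self._started = clock()

    def proxy(self):
        """Return the proxy for the current session, rotating once the window expires."""
        now = self._clock()
        if now - self._started >= self._sticky_seconds:
            self._current = next(self._cycle)
            self._started = now
        return self._current
```

Every request made within the same window gets the same proxy. This is what login-based scraping needs, because the site sees one IP for the whole session.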

I was able to do this because all of the data is loaded at once, but you need JavaScript to render each one. I have also scraped small sites and a handful of pages without using a single proxy server. However, just like Luminati, its pricing is also seen as expensive. Without mincing words, I can boldly tell you that Luminati is the best proxy service provider out there right now, and other sources confirm it. This is because Luminati has some key features that many other providers lack. Take web scraping, for example: it has a session management system that is second to none and gives you 100% control. You can make this list by manually copying and pasting, or automate it using a scraper (if you don't want to go through the effort of copying and pasting every time the proxies you have get removed). You can write a script to grab all the proxies you need and build this list dynamically every time you initialize your web scraper. Large proxy services using datacenters for rotating proxies may have thousands upon thousands of IP addresses running at a single time from one datacenter. A rotating proxy service is the IP rotation service offered by most reputable residential and datacenter proxy providers. When backconnect is mentioned on rotating proxy providers' websites, think of it as a service, because it provides the user with great convenience.
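Here is a minimal sketch of building the proxy list dynamically. It scans downloaded text for IPv4 `host:port` pairs with a regular expression, drops impossible addresses and duplicates, and returns the rest as proxy URLs. It rests on two assumptions:

- The list is published as plain `ip:port` text.
- The proxies speak HTTP, hence the default `http` scheme.

Lists laid out as HTML tables, with separate IP and port columns, would need an HTML parser instead. `extract_proxies` is a hypothetical name.

```python
import re

# IPv4 "host:port" pairs such as 203.0.113.10:8080, anywhere in a block of text.
PROXY_PATTERN = re.compile(r"\b((?:\d{1,3}\.){3}\d{1,3}):(\d{2,5})\b")


def extract_proxies(text, scheme="http"):
    """Return unique proxy URLs found in text, in first-seen order.

    scheme is an assumption about what the proxies speak; change it for SOCKS lists.
    """
    found = []
    seen = set()
    for host, port in PROXY_PATTERN.findall(text):
        if not all(int(octet) <= 255 for octet in host.split(".")):
            continue  # not a valid IPv4 address, e.g. 999.1.1.1
        if not 0 < int(port) <= 65535:
            continue  # outside the valid TCP port range
        url = f"{scheme}://{host}:{port}"
        if url not in seen:
            seen.add(url)
            found.append(url)
    return found
```

Running this each time the scraper starts, on freshly downloaded list text, keeps the pool in step with whatever the source currently publishes.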


One of the most frustrating parts of automated web scraping is constantly dealing with IP blocks and CAPTCHAs. Scraper API rotates IP addresses with each request, from a pool of hundreds of thousands of proxies across over a dozen ISPs, and automatically retries failed requests, so you will never be blocked. Scraper API also handles CAPTCHAs for you, so you can concentrate on turning websites into actionable data. From its name, you can tell that it is a tool for web scraping. If you are extracting data from the web at scale, you've probably already figured out the answer. The website you are targeting may not like that you're extracting data, even though what you are doing is entirely ethical and legal. If you're a web scraper, you should always be respectful to the websites you scrape. As long as you play nice, you are much less likely to run into any legal issues. The other approach is to use smart algorithms to manage your proxies automatically. Here the best option is a solution like Crawlera, the smart downloader developed by Scrapinghub. With its simple workflow, Helium Scraper is not only easy but also fast to use, as it comes with a simple, intuitive interface. Helium Scraper is also one of the web scraping tools with a large feature set, including scrape scheduling, proxy rotation, text manipulation, and API calls, among other options. ScrapingBee uses a large pool of IPs to route your requests through and avoid getting banned. This proxy API provider has a proxy pool of over 40 million IPs. Their pool mixes datacenter proxies, residential proxies, and mobile proxies. One thing I like about Scraper API is that it provides support for solving CAPTCHAs. Aside from this, it also supports headless browsers and gives you unlimited bandwidth.
A rotating proxy is a proxy server that assigns a new IP address from the proxy pool for each connection.
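The "smart algorithms" approach mentioned above can be sketched in a few lines:

1. Pick a proxy from the pool for each request.
2. When a request fails, retry with another pick.
3. Retire proxies that keep failing.

This is not a description of how Scraper API or Crawlera work internally. `ProxyManager`, its `fetch(url, proxy)` callback, and the `max_failures`/`max_attempts` thresholds are all assumptions. The callback is expected to raise an exception when it sees a block (for example an HTTP 403 or 429) or a network error.

```python
import random


class ProxyManager:
    """Pick proxies at random, retry failed requests, and retire proxies that keep failing."""

    def __init__(self, proxies, max_failures=3, rng=None):
        self.failures = {proxy: 0 for proxy in proxies}  # consecutive failures per proxy
        self.max_failures = max_failures
        self.rng = rng or random.Random()

    def healthy(self):
        """Proxies that have not yet hit max_failures consecutive failures."""
        return [p for p, n in self.failures.items() if n < self.max_failures]

    def get(self, url, fetch, max_attempts=3):
        """Call fetch(url, proxy); after a failure, retry with a new random healthy proxy.

        The new pick may be the same proxy again if it is still below max_failures.
        """
        last_error = None
        for _ in range(max_attempts):
            candidates = self.healthy()
            if not candidates:
                break
            proxy = self.rng.choice(candidates)
            try:
                result = fetch(url, proxy)
            except Exception as exc:  # any error (block, timeout, ...) counts as a strike
                self.failures[proxy] += 1
                last_error = exc
                continue
            self.failures[proxy] = 0  # success resets the proxy's strike count
            return result
        raise RuntimeError(f"all attempts failed for {url}") from last_error
```

Resetting the count after a success means a proxy is retired only after consecutive failures. This tolerates the occasional timeout on an otherwise good IP.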


Also, I have worked on projects that got blocked and blacklisted, and my system's IP address was the cause. If you can afford to, it'll make your life a lot easier if you add a safety multiple of 2-3x to that number so that you're not constantly bumping into rate limits. So for the 100,000 requests per hour, I'd suggest using about proxy server IP addresses. Scraper API is used by a good number of developers around the world. It is also fast and reliable, and it offers a free trial, just like Crawlera. Unlike most proxy providers, Scraper API allows unlimited bandwidth on every proxy it uses, which means you are charged only for successful requests. This makes it much easier for customers to estimate usage and keep costs down for large-scale web scraping jobs.
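A client can also avoid constantly bumping into rate limits. It does this by tracking how many requests each proxy has sent in the current window and routing each new request to the least-used proxy that is still under its limit. The sketch below assumes a fixed per-proxy limit and a sliding one-hour window. `PerProxyRateLimiter` and its parameters are hypothetical, and the real limit has to be set per target site.

```python
import time
from collections import deque


class PerProxyRateLimiter:
    """Route each request to the least-used proxy that is still under its per-window limit."""

    def __init__(self, proxies, max_requests, window_seconds=3600.0, clock=time.monotonic):
        self.max_requests = max_requests  # assumed per-IP limit for the target site
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so tests can control time
        self.history = {proxy: deque() for proxy in proxies}

    def acquire(self):
        """Return a proxy to use now, or None if every proxy has hit its limit."""
        if not self.history:
            return None
        now = self.clock()
        for stamps in self.history.values():
            # Forget requests that have slid out of the window.
            while stamps and now - stamps[0] >= self.window_seconds:
                stamps.popleft()
        # Least-used proxy first, so load spreads evenly across the pool.
        proxy = min(self.history, key=lambda p: len(self.history[p]))
        if len(self.history[proxy]) >= self.max_requests:
            return None
        self.history[proxy].append(now)
        return proxy
```

A `None` result tells the scraper to wait rather than send a request that would push an IP past the site's limit.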


It also helps with handling headless Chrome, which isn't simple, especially when scaling a headless Chrome grid. Scraper API takes care of a host of issues such as proxies, browsers, and CAPTCHAs, so that you don't have to. With Scraper API, all you need to do is send a simple API call, and the HTML of the page is returned to you. Free proxies tend to die out quickly, mostly within days or hours, and may expire before the scraping even completes. To prevent that from disrupting your scrapers, write some code that automatically picks up and refreshes the proxy list you use for scraping with working IP addresses. With Crawlera, instead of having to manage a pool of IPs, your spiders just send a request to Crawlera's single endpoint API to retrieve the desired data.
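Here is a minimal sketch of refreshing a free-proxy list: probe each candidate with a TCP connection and keep only those that answer. The `is_reachable` and `refresh_pool` helpers and the 3-second timeout are assumptions. A successful connection only shows that something is listening on that port, so a stricter check would send a real request through the proxy.

```python
import socket
from urllib.parse import urlsplit


def is_reachable(proxy_url, timeout=3.0):
    """Return True if a TCP connection to the proxy's host and port succeeds within timeout.

    This only shows that something is listening; it does not prove the proxy forwards traffic.
    """
    parts = urlsplit(proxy_url)
    try:
        host, port = parts.hostname, parts.port
    except ValueError:  # port is not a number or is out of range
        return False
    if host is None or port is None:
        return False
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, DNS failure, ...
        return False


def refresh_pool(candidates, check=is_reachable):
    """Keep only the candidate proxy URLs that pass check, preserving their order."""
    return [proxy for proxy in candidates if check(proxy)]
```

Calling `refresh_pool` on a schedule, or before each scraping run, drops proxies that have died since the list was built.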
